In the video for Find My Way, Paul McCartney doesn’t look a day over, uh, the year 1966-67, when the ‘Paul is dead’ theory started working its way around the world. The theory was fuelled by John Lennon singing “Here’s another clue for you all / the walrus was Paul” on Glass Onion, by the singer being shown barefoot and walking out of step with his bandmates on the cover of the Abbey Road album, and by Revolution 9, which, played backwards, sounds like the words “turn me on, dead man” on repeat.

What Paul has helped to do in the 2021 video is simply ‘de-age’, the hip term Silicon Valley has been marketing for a few years. On the McCartney III Imagined edition of Find My Way (featuring Beck), director Andrew Donoho makes a Beatle-worthy young Paul dance through a hotel hallway (and more), culminating in a pull-off-the-mask moment to reveal Beck.

But the deepfake-driven video, co-produced by Hyperreal Digital, shows a twenty-something Paul who looks nothing like the 79-year-old singer. And it obviously makes us question the ethics of a digital facelift.

“The technology to de-age talent and have them perform in creative environments like this is now fully-realised, even with one of the most recognised faces in the world,” said Hyperreal chief executive Remington Scott.

De-ageing tech is growing up

Robert De Niro looks visibly younger in the film The Irishman. Picture: Netflix

Perhaps the best use — or at least the most talked about use — of de-ageing has been in the Martin Scorsese-directed film The Irishman in which the characters of Robert De Niro, Al Pacino and Joe Pesci have been given a digital treatment by Pablo Helman and his team at Industrial Light & Magic.

To offer a basic understanding of the technology, there are two simple ways to implement it. One is to have the actors on a motion-capture stage, wearing headgear and face-tracking dots, as 52-year-old Will Smith did in Ang Lee’s Gemini Man. The other is to go the Scorsese way and direct the film like any other, but with some attachments to the camera set-up. In The Irishman there was no mo-cap or headgear as the story moves back and forth between 1949 and 2000. Helman came up with a camera rig that had the director’s camera in the centre, with two film-grade Alexa Mini cameras, one on either side, that captured infrared images, helping pick up volumetric information.

The entire process ultimately took around four years. In 2015, Scorsese and Helman tested de-ageing visual effects by having Robert De Niro come over to recreate a scene from Goodfellas. The film then went into production, and post-production took time as Helman had hours of footage and gigabytes of data to sift through before coming up with digital tweaks. The team, in fact, created software called Flux to help the process.

Various de-ageing techniques have been used elsewhere: an aged-down Samuel L Jackson in Captain Marvel reimagines the character of Nick Fury in 1995, Michael Douglas appears as a younger Hank Pym in Ant-Man And The Wasp, and Robert Downey Jr. as a younger Tony Stark in Captain America: Civil War. The implementation has ranged from outstanding to poor. But it’s the efforts of director David Fincher and his team that are best remembered. In The Curious Case of Benjamin Button, the Mova camera system was used to capture Brad Pitt’s facial expressions; it involved placing a series of cameras around an actor to gather facial movements and then using that data to digitally build someone older or younger.

Beyond big-budget deepfakes

Earlier this year, deepfake technology found its way to the genealogy website MyHeritage. The tool on offer allows users to animate old photographs of loved ones, and the outcome is a short, looping video in which people can be seen moving their heads and smiling.

Deepfake technology doesn’t need a Hollywood-scale set-up. A few months ago, Tom Cruise videos were circulated in which we saw a digital impersonation of the Mission: Impossible star. Chris Ume, the visual effects artist in Belgium who created the videos, said in an interview that a lot of expertise and time went into making them. What you see in the videos is the body and voice of Miles Fisher, a Tom Cruise impersonator. Only the face, from the forehead to the chin, of the real Tom Cruise is shown in the videos.

Audio deepfake

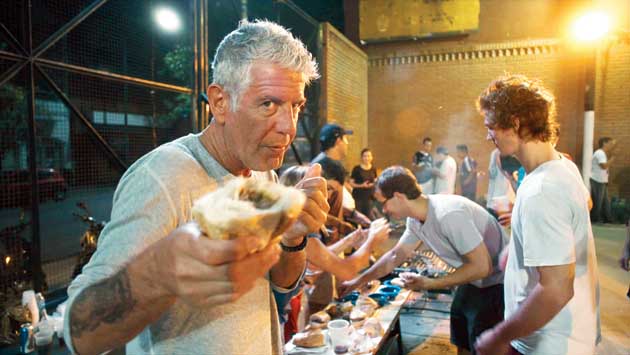

Anthony Bourdain’s voice has been simulated for a few seconds in a new documentary titled Roadrunner

Besides video, voice cloning is also being used, as in the just-released documentary Roadrunner: A Film About Anthony Bourdain, which captures the punchy world of the chef who died three years ago, at the age of 61. Director Morgan Neville uses archive footage to bring alive the magic of Bourdain, but it’s 45 seconds of the 118-minute film that’s attracting all the attention.

Neville used AI technology to digitally re-create Bourdain’s voice, with software synthesising the audio of three quotes from the television host, the director told The New Yorker. The matter came up when the journalist Helen Rosner asked Neville how he had managed to get a clip of Bourdain reading an email he had sent to a friend. Neville said he had contacted an AI company and given it a dozen hours of Bourdain speaking.

The use of AI in text-to-speech research is not new, and start-ups like Descript offer voice-cloning software. But nobody has confirmed which firm recreated Bourdain’s voice.